Introduction

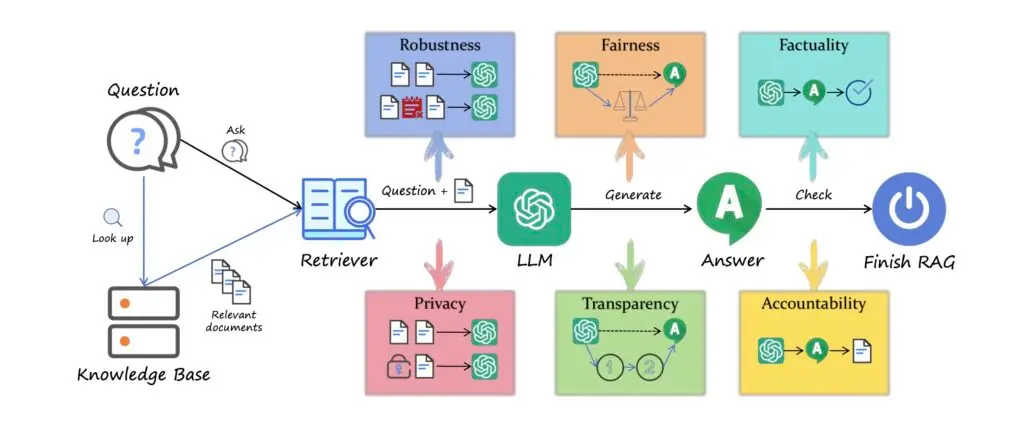

Retrieval-Augmented Generation (RAG) has become a transformative technique for large language models (LLMs): by retrieving external information and conditioning generation on it, RAG improves factual accuracy and reduces common failure modes such as hallucination. Despite these advantages, the trustworthiness of RAG systems remains under-explored. This analysis examines a unified framework for assessing RAG trustworthiness across six critical dimensions: factuality, robustness, fairness, transparency, accountability, and privacy. These dimensions matter to researchers, developers, and users who rely on RAG in high-stakes domains such as healthcare, finance, and law. The framework offers a structured way to evaluate and improve trustworthiness, so that RAG systems can be reliable and ethical in real-world deployment.

1. Factuality

Factuality underpins the credibility of any language model and remains the most crucial capability for trustworthy outputs. In a RAG setting, factuality requires the LLM to combine its internal knowledge with retrieved external content to produce accurate, logically consistent responses. Key challenges include:

- Conflicts Between Internal and External Knowledge: RAG systems must detect discrepancies between knowledge acquired during pre-training and newly retrieved documents. Effective prioritization mechanisms are needed to align outputs with reliable, up-to-date sources.

- Noise in Retrieved Documents: Retrieval components may introduce irrelevant or outdated information. Robust systems need mechanisms to filter this noise and maintain high response accuracy.

- Handling Long Contexts: Complex, multi-hop queries that require inference across long documents can challenge the model’s ability to maintain coherence and accuracy. Enhanced information filtering and reasoning capabilities are critical.
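To make noise filtering and knowledge-conflict handling concrete, here is a minimal Python sketch under stated assumptions. It uses a toy lexical relevance score (the fraction of query tokens found in a passage) to discard noisy retrievals, and it treats publication year as a crude recency signal when retrieved evidence should override the model's internal answer. `Passage`, `relevance`, `filter_noise`, and `choose_answer` are hypothetical names; real systems would more likely rely on learned rerankers and richer source-reliability signals.

```python
import re
from dataclasses import dataclass

@dataclass
class Passage:
    text: str
    year: int  # publication year; a crude recency signal (assumption)

def tokens(s: str) -> set[str]:
    """Lowercased alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))

def relevance(query: str, passage: Passage) -> float:
    """Toy lexical relevance: fraction of query tokens present in the passage."""
    q = tokens(query)
    return len(q & tokens(passage.text)) / len(q) if q else 0.0

def filter_noise(query: str, passages: list[Passage], threshold: float = 0.5) -> list[Passage]:
    """Drop retrieved passages whose relevance falls below the threshold."""
    return [p for p in passages if relevance(query, p) >= threshold]

def choose_answer(internal_answer: str, evidence: list[tuple[str, int]]) -> str:
    """Resolve internal-vs-external conflicts by preferring the answer backed by
    the most recent retrieved evidence; fall back to the model's own answer."""
    if not evidence:
        return internal_answer
    answer, _year = max(evidence, key=lambda e: e[1])
    return answer

query = "capital of Australia"
retrieved = [
    Passage("Canberra is the capital of Australia", 2023),
    Passage("Sydney hosts the opera house", 2020),  # off-topic noise
]
kept = filter_noise(query, retrieved)
print([p.text for p in kept])  # ['Canberra is the capital of Australia']
print(choose_answer("Sydney", [("Canberra", 2023)]))  # Canberra
```

The threshold of 0.5 is arbitrary; in practice it would be tuned on held-out queries.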

2. Robustness

Robustness ensures that RAG systems maintain stable performance across various input conditions and threats. This dimension covers:

- Signal-to-Noise Ratio: The model’s ability to focus on relevant content within noisy retrieval results and maintain output quality.

- Granularity and Order: Robust RAG systems should handle information at different levels of granularity and produce consistent responses regardless of the order in which documents are retrieved.

- Misinformation Management: Models need the capability to identify and mitigate the influence of misinformation within retrieved content to avoid generating misleading outputs.

Research has shown that more advanced retrieval and generation mechanisms, such as adaptive retrieval strategies and iterative reasoning, significantly improve robustness.
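One way to probe signal-to-noise and order sensitivity is to inject irrelevant passages, reorder the context, and measure how often the answer stays the same as on the clean context. The sketch below assumes a deterministic generator function; `first_doc` and `majority_vote` are hypothetical stand-ins for an LLM call, and rotating the context is just one simple, reproducible way to vary document order.

```python
from collections import Counter
from typing import Callable, Sequence

# A "generator" maps (query, retrieved documents) to an answer string.
Generator = Callable[[str, Sequence[str]], str]

def order_consistency(generate: Generator, query: str,
                      docs: Sequence[str], distractors: Sequence[str]) -> float:
    """Fraction of noise-augmented, reordered contexts whose answer matches
    the answer produced from the clean context."""
    baseline = generate(query, docs)
    noisy = list(docs) + list(distractors)  # inject irrelevant passages
    rotations = [noisy[i:] + noisy[:i] for i in range(len(noisy))]  # vary order
    matches = sum(generate(query, ctx) == baseline for ctx in rotations)
    return matches / len(rotations)

# Hypothetical toy generators standing in for an LLM call.
def first_doc(query: str, docs: Sequence[str]) -> str:
    return docs[0]  # position-biased: trusts whatever comes first

def majority_vote(query: str, docs: Sequence[str]) -> str:
    return Counter(docs).most_common(1)[0][0]  # order-insensitive when there is no tie

docs = ["Canberra"] * 3
distractors = ["Sydney", "Perth"]
print(order_consistency(first_doc, "capital of Australia?", docs, distractors))      # 0.6
print(order_consistency(majority_vote, "capital of Australia?", docs, distractors))  # 1.0
```

The position-biased generator keeps its answer in only 3 of 5 orderings, while the vote-based one is unaffected; with a real model, the same loop could be run over a set of queries and the scores averaged.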

3. Fairness

Fairness addresses the need for equitable treatment in outputs, ensuring that biases are minimized. This dimension is particularly complex for RAG systems due to:

- Knowledge Source Imbalance: External knowledge bases may disproportionately represent specific demographics or perspectives, introducing biases into the model’s outputs.

- Algorithmic Bias in Retrieval: Retrieval algorithms that favor popular or recent sources may inadvertently reinforce biases and crowd out diverse perspectives.

- Information Integration: The model’s process for integrating retrieved content should include checks for fair representation and avoid amplifying pre-existing biases.

Strategies like diverse data sampling, debiasing algorithms, and fairness-aware retrieval mechanisms can mitigate these risks.
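Fairness-aware retrieval can take many forms. The sketch below shows one simple option, swapped in for illustration rather than taken from the framework: round-robin re-ranking that interleaves results from different source groups, so that a single over-represented source cannot fill the entire top-k. The group labels and the `diversify` function are assumptions.

```python
from collections import deque
from typing import Callable, Hashable, TypeVar

T = TypeVar("T")

def diversify(ranked: list[T], group_of: Callable[[T], Hashable], k: int) -> list[T]:
    """Round-robin re-ranking: take the best remaining item from each source
    group in turn, keeping the retriever's order within each group."""
    queues: dict = {}
    for item in ranked:  # groups are visited in order of their best-ranked item
        queues.setdefault(group_of(item), deque()).append(item)
    result: list[T] = []
    while len(result) < k and any(queues.values()):
        for q in queues.values():
            if q and len(result) < k:
                result.append(q.popleft())
    return result

# Hypothetical IDs whose first letter names the source group.
ranked = ["a1", "a2", "a3", "b1", "c1"]
print(diversify(ranked, group_of=lambda d: d[0], k=4))  # ['a1', 'b1', 'c1', 'a2']
```

Strict interleaving trades some relevance for coverage; softer variants might only cap each group's share of the top-k.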

4. Transparency

Transparency enhances user trust by making the decision-making processes of RAG systems more understandable. Key aspects include:

- Retrieval Process Clarity: Exposing how documents are retrieved and ranked lets users judge where information comes from and how reliable it is.

- Integration Methodology: Detailing how retrieved information is fused with user queries provides insights into the model’s logic and reasoning.

- Traceability: Clear attribution of content to specific sources helps in verifying the output’s origins and accuracy.

Frameworks like RAG-Ex and tools for visualizing intermediate processing steps have improved transparency, helping users understand how outputs are generated.
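Retrieval-process clarity and traceability can be supported by logging every candidate's score and rank and by tagging each passage in the prompt with its source ID. The sketch below assumes a dictionary corpus and a toy word-overlap scorer; `TraceEntry`, `overlap`, and `retrieve_with_trace` are hypothetical names, not part of RAG-Ex or any other tool.

```python
import json
from dataclasses import dataclass, asdict
from typing import Callable

@dataclass
class TraceEntry:
    rank: int
    doc_id: str
    score: float
    snippet: str

def overlap(query: str, text: str) -> float:
    """Toy scorer: number of shared lowercase words."""
    return float(len(set(query.lower().split()) & set(text.lower().split())))

def retrieve_with_trace(query: str, corpus: dict[str, str],
                        score_fn: Callable[[str, str], float], k: int = 2):
    """Rank every document, keep a trace of all candidates, and build a context
    in which each passage is tagged with its source ID."""
    scored = sorted(
        ((score_fn(query, text), doc_id, text) for doc_id, text in corpus.items()),
        key=lambda t: (-t[0], t[1]),  # highest score first, ties broken by ID
    )
    trace = [TraceEntry(rank=i + 1, doc_id=d, score=s, snippet=t[:60])
             for i, (s, d, t) in enumerate(scored)]
    context = "\n".join(f"[{e.doc_id}] {corpus[e.doc_id]}" for e in trace[:k])
    return context, trace

corpus = {
    "doc-a": "RAG combines retrieval with generation",
    "doc-b": "Canberra is the capital of Australia",
    "doc-c": "The capital city hosts parliament",
}
context, trace = retrieve_with_trace("what is the capital of australia", corpus, overlap)
print(context)
print(json.dumps([asdict(e) for e in trace], indent=2))  # full ranking, including unused docs
```

Keeping the unused candidates in the trace lets a reviewer see not only what the model was shown but also what was ranked out.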

5. Accountability

Accountability mechanisms ensure that RAG systems can be held responsible for their outputs and the sources used. This involves:

- Knowledge Attribution: Embedding citations within outputs provides verifiable references, bolstering the reliability of the information.

- Audit Trails: Implementing systems to trace content back to specific documents in the retrieval process enhances oversight and correction capabilities.

- Source Validation: Natural language inference (NLI) and test-time adaptation strategies can be used to verify that cited sources actually support the outputs.

Research projects like WebGPT and tools employing knowledge validation models have made significant strides in embedding accountability into RAG systems.
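A citation audit can check each cited sentence against its source and flag uncited claims. In the sketch below, a simple lexical-overlap score stands in for an NLI model's entailment probability (a plainly weaker, swapped-in substitute). The `[doc-id]` citation format, the stop-word list, the 0.8 threshold, and the function names are all assumptions.

```python
import re

CITATION = re.compile(r"\s*\[([\w-]+)\]")  # matches " [doc-id]" markers
STOPWORDS = {"a", "an", "and", "in", "is", "of", "the", "to"}

def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def support_score(claim: str, source: str) -> float:
    """Crude stand-in for an NLI entailment score: share of the claim's
    content words that also appear in the cited source."""
    content = _words(claim) - STOPWORDS
    if not content:
        return 0.0
    return len(content & _words(source)) / len(content)

def audit_citations(answer: str, sources: dict[str, str], threshold: float = 0.8):
    """Return (claim, cited_id, supported) records; uncited claims get id None."""
    records = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        claim = CITATION.sub("", sentence)
        cited = CITATION.findall(sentence)
        if not cited:
            records.append((claim, None, False))  # nothing to trace it back to
        for doc_id in cited:
            source = sources.get(doc_id)
            ok = source is not None and support_score(claim, source) >= threshold
            records.append((claim, doc_id, ok))
    return records

sources = {
    "doc-b": "Canberra is the capital of Australia.",
    "doc-c": "Parliament House opened in 1988.",
}
answer = ("Canberra is the capital of Australia [doc-b]. "
          "Parliament House opened in 1975 [doc-c]. Sydney is larger.")
for record in audit_citations(answer, sources):
    print(record)
```

The second claim shares most of its words with its source yet changes the year; here it falls just below the threshold, but lexical overlap misses many such contradictions, which is why an NLI model is the better fit for real source validation.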

6. Privacy

Privacy protection ensures that RAG systems do not compromise sensitive user data. Key considerations include:

- Data Handling and Retrieval Risks: Retrieval processes may inadvertently expose personal or sensitive data stored within knowledge bases.

- Attack Vectors: Techniques such as prompt injection and knowledge poisoning reveal potential vulnerabilities in RAG systems.

- Privacy-Preserving Mechanisms: Approaches like differential privacy and strategic data mixing can mitigate risks associated with private data exposure.

Studies have demonstrated that even in black-box settings, attackers can exploit RAG systems to extract sensitive data. Ongoing improvements to privacy safeguards are therefore critical.
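Retrieved passages can be scrubbed of obvious personal data before they reach the prompt. The sketch below uses regular-expression redaction, a simpler mitigation swapped in for illustration that is not differential privacy; the patterns and the `redact` function are illustrative assumptions and would miss many forms of sensitive data.

```python
import re

# Illustrative patterns only (assumption). Order matters: SSNs are removed
# before the broader phone pattern can claim them.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with [LABEL] placeholders and count redactions per label."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, counts[label] = pattern.subn(f"[{label}]", text)
    return text, counts

chunk = "Contact Jane at jane.doe@example.com or +1 555-123-4567. SSN 123-45-6789."
clean, counts = redact(chunk)
print(clean)   # Contact Jane at [EMAIL] or [PHONE]. SSN [SSN].
print(counts)  # {'EMAIL': 1, 'SSN': 1, 'PHONE': 1}
```

Redacting at retrieval time limits what an extraction attack can surface, but it does not protect data the model memorized during training.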

Conclusion

The trustworthiness of RAG systems is multifaceted, spanning factuality, robustness, fairness, transparency, accountability, and privacy. The unified framework both maps the current state of research and underscores the need for comprehensive evaluation and development to make these systems more reliable. As RAG technology evolves, stakeholders must prioritize these dimensions to ensure responsible and ethical AI deployment. Understanding and applying the framework can guide developers and researchers toward RAG systems that meet societal expectations and practical needs.